Graphic by R. Nial Bradshaw. ©2017.

By Tricia Wachtendorf and James Kendra

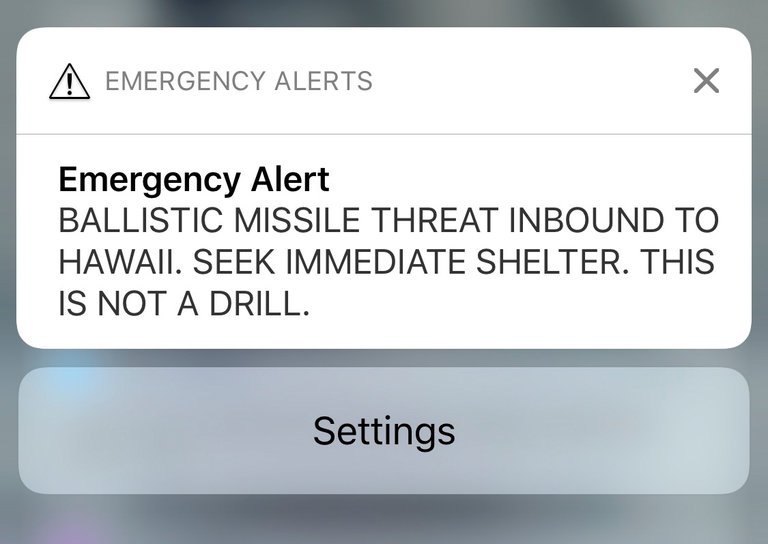

It might be understandable to feel a degree of empathy for the Hawaii Emergency Management Agency (HI-EMA) employee who sent a false ballistic missile alert on January 13—many of us have felt the sinking feeling after hitting reply all on an email meant for one person.

Although the event stoked outrage, the explanation seemed reasonable: the wrong option was clicked in a screen menu. The response to the false alert by HI-EMA Administrator Vern Miyagi (who has since resigned) was both swift and seemingly appropriate.

“It’s my responsibility, so this would be my fault,” he stated in a press conference later that day.

Both Miyagi and Hawaii Governor David Ige said human error caused the false alert, indicating that an employee (who has since been fired) had accidentally selected the wrong option in the alert interface while testing the system. Moreover, there was no protocol for officially retracting the alert through the same interface. The agency began notifying counties and departments, such as the Hawaii Police Department, less than five minutes after the alert was sent. Even so, the only quick ways to inform the public about the mistake were social media, which not everyone monitors, and answering calls from concerned individuals.

A full 38 minutes passed after the initial warning before a cancellation message was finally sent through the same automated alert system. For those on the Islands who feared death or destruction, that felt like an eternity, yet it was a relatively short time in which to turn the bureaucratic wheels of government. To make matters worse, the governor didn't know his Twitter password just when he needed it most, closing off another avenue for promptly correcting the error.

Two weeks later, however, a HI-EMA report on the incident, as well as a [Federal Communications Commission report](http://bit.ly/2tMpmcU), indicated there were factors beyond human error at play.

According to the reports, the initial explanations did not accurately represent what had transpired. The employee who issued the alert claimed he had not heard the words “exercise, exercise, exercise” that normally precede a test drill, and that he genuinely believed a ballistic missile was headed for the Islands. The HI-EMA report stated that the employee had previously mistaken drills for real-world emergencies.

A screenshot from a mobile device shows the erroneous missile warning issued in January.

What can we take away from all this? First, preliminary reports like these risk overemphasizing human error. By placing the blame for this deeply upsetting event on individuals and citing human error as the primary cause of the crisis, they shift the focus away from the larger organizational, even strategic, context.

Human error aside, the interface problem was still present. The fact that a false alert could result from a selection error is just as much of a problem today as it was on January 13.

There is an entire science that explains how technology and technical system designs can make human errors, such as the one in Hawaii, much more likely. There is also a long history of failing to improve designs even when repeated errors take place. Reply, for instance, is still located next to Reply all. We blame the sender, keeping the system interface intact.

Interface designers have criticized the placement of the test and real-world missile attack options in the same drop-down menu. But even if this crisis is enough to change that particular menu, there is little assurance that future technical design will account for end-user error. Actions with serious consequences should require extra deliberation and a confirming step; they should not arise from a wavering finger, a momentary lack of attention, or someone’s faulty sensemaking. Although HI-EMA has indicated that a second person will now be required to confirm issued alerts, we think the incident in Hawaii should provoke a wholesale reconsideration of the potential for error, and not just in the emergency management realm.

The slow recovery from the error is also significant, and it raises more questions than we can presently answer. While HI-EMA could tweet a retraction, sending a retraction by phone alert, one that would reach everyone who had received the erroneous message, was thought to involve many steps, including contacting the Federal Emergency Management Agency (FEMA) and programming a retraction message.

Why the subsequent confusion? There were conflicting reports on whether Hawaii officials contacted FEMA for permission to retract the alert. A news report said that FEMA permission was not needed and that HI-EMA was seeking guidance on how to handle the situation, but the HI-EMA report stated that officials were getting “authorization.” Clearly, there were misunderstandings. We can now see some form of what the sociologists Lee Clarke and Charles Perrow termed “prosaic organizational failure”: a belief that systems were in place and that those systems worked, combined with a lack of awareness that the systems were tightly coupled. Once a mistake was made, turning back was difficult and time-consuming.

By now, most researchers are skeptical of attributing accidents to human error without looking at the larger context. What we do see is a system’s inability to anticipate, manage, and recover from such a mistake.

Diane Vaughan’s work on the Challenger disaster revealed that the 1986 tragedy resulted not from mere human error, but rather from factors deeply connected to organizational culture and hidden aspects of technological interaction. We might consider her careful ethnographic approach to this topic, some twenty years after her book was first published.

Until the false ballistic missile alert, the system worked, but only in people’s imaginations. Any individual can make a mistake—in fact, we should expect that. The new plan to have two people on hand for subsequent tests is an improvement, but this incident should provoke a much deeper examination of this key element of our emergency management system.

HI-EMA is likely not the only agency in the United States to have such an interface, or to understand its role differently than its colleagues at FEMA do. Every aspect of the system should now be examined: software and interface design, procurement policies, and the fact that there is virtually no research on how emergency officials interact with their technologies or incorporate those technologies into larger multi-organizational, multi-jurisdictional systems.

Perhaps above all else, we can take a fresh look at the fragmented emergency management system in the United States and reexamine the roles, responsibilities, and expectations of agencies at all levels of government. Let’s not make the mistake of simply chalking what happened in Hawaii up to human error. We would be wise to see this as a signal event for possible future breakdowns.

Tricia Wachtendorf is a professor in the Department of Sociology and Criminal Justice at the University of Delaware. She is the co-director of the Disaster Research Center and author of American Dunkirk: The Waterborne Evacuation of Manhattan on 9/11.