Assessing COVID-19 Vaccine Misinformation Interventions Among Rural, Suburban, and Urban Residents

Publication Date: 2022

Abstract

Misinformation about COVID-19 vaccines has contributed to around 22% of the adult population in the United States being unwilling to vaccinate themselves against the disease (Khubchandani et al., 2021). As part of an effort to reduce the impact of vaccine misinformation, this study examined and compared how commenting on a Facebook misinformation post or viewing an artificial intelligence (AI) fact-check label affected attitudes toward COVID-19 vaccination. We found that both interventions were effective at promoting positive attitudes toward vaccination compared to the misinformation exposure condition. However, the intervention effects differed by participants’ residential locations: the commenting intervention was more effective among suburban users, and the AI fact-check label intervention was more effective among urban users. Among rural users, the commenting intervention produced a modest increase in positive attitudes toward vaccination, and the AI fact-check label had no significant effect. These findings suggest that although social media platform-based misinformation interventions may be promising tools, they should be designed to account for differences among users in different locations.

Introduction

Despite the medical consensus that the COVID-19 vaccine is the best way to protect oneself and one’s family, friends, and community from the disease, vaccine hesitancy remains persistently high in the United States (Coustasse et al., 2021). Among other factors, misinformation and conspiracy theories have played a prominent role in increasing some people’s resistance to the vaccine. Conspiratorial misinformation has spread particularly through social media.

In an effort to counter conspiratorial health-related misinformation, media researchers have studied various misinformation interventions applied to social media platforms. These interventions include displaying news stories related to the misinformation topic in social media newsfeeds (Bode & Vraga, 2015), real-time user comments that correct misinformation (Vraga et al., 2021), and false-information tags added through a web browser extension (Lee, 2020). For this study, we define social media platform-based interventions as efforts implemented on social media platforms to mitigate the negative impact of misinformation about the COVID-19 vaccine.

The current research examined two types of social media platform-based interventions. In the first, which we call the “commenting on misinformation post” condition, users were required to leave comments on a misinformation post. This intervention gave users control over the process and encouraged more active engagement with the misinformation. In the second, which we call the “artificial intelligence (AI) fact-check label” condition, users viewed a fact-check label produced by an AI system. Here, user engagement was limited because users were not given the opportunity to comment. Given these differences, we define the commenting intervention as a “user agency-based misinformation intervention” and AI fact-check labeling as a “machine agency-based misinformation intervention.”

This experiment allowed us to analyze the degree to which these interventions protect users from the negative impacts of consuming conspiratorial misinformation on social media. We also examined whether urban, suburban, and rural users responded differently to the interventions. Addressing this question provides insight into the possibility of targeting misinformation interventions to specific groups.

Literature Review

Commenting as User Agency-Based Misinformation Intervention

One way to defend oneself against misinformation is to scrutinize misleading content carefully rather than processing it superficially and accepting it at face value (Pennycook et al., 2020). Previous research shows that expressing oneself on social media leads to greater cognitive elaboration (Oeldorf-Hirsch, 2018; Yoo et al., 2017), meaning that individuals process information more deeply by linking it to their existing knowledge or paying closer attention to it (Chaiken et al., 1995). The challenge is to identify the social media tools that activate users’ cognitive elaboration when they are exposed to misinformation.

As explained earlier, commenting on a misinformation post is a user agency-based intervention because it gives users control over how they communicate about the misinformation content (i.e., users decide whether to simply indicate agreement with the post or to add specific thoughts in the comments section). Social media platforms not only function as sources of information but also motivate users to express themselves, allowing them to exercise agency. The interactive, bidirectional features of social media communication facilitate expression (Halpern & Gibbs, 2013). Self-expression on social media takes various forms, for example, leaving comments on posts, replying to others’ opinions, or creating posts on one’s newsfeed. Further, these interactive features make one’s expression immediately visible to the public, including one’s social networks (Gil de Zúñiga et al., 2014). Hence, by expressing their thoughts online rather than passively consuming information, users may come to see themselves as issue-involved participants who take action rather than as fence-sitters (Gil de Zúñiga et al., 2014).

AI Fact-Check Label as Machine Agency-Based Misinformation Intervention

In contrast to commenting, AI fact-checking is an intervention driven solely by system-generated rules: it labels misinformation as false based on an automated AI system’s decision-making process. Few studies have explored the effects of fact-checking interventions attributed to AI. Zhang, Featherstone, and colleagues (2021) showed that an AI fact-check label (i.e., “This post is falsified. Fact-checked by Deep Learning Algorithms”) was rated lower by users than fact-check labels produced by experts from universities and health institutions. Even so, both types of fact-check labels were generally effective at mitigating the negative impact of vaccine misinformation (Zhang, Featherstone, et al., 2021).

Studies have found that individuals tend to believe that machines are unbiased and objective entities, a tendency known as the machine heuristic (Sundar, 2008). Research has also shown that individuals tend to view algorithmic judgment as superior to decisions made by humans alone (Dawes et al., 1989). Together, these two dynamics suggest that users may perceive AI fact-check labels as objective, unbiased, and reliable. If so, AI fact-check labels have the potential to be effective tools for reducing the impact of online misinformation about the COVID-19 vaccine and increasing positive attitudes toward COVID-19 vaccination.

Accounting for Differences Between Rural, Suburban, and Urban Residents

Exploring differences by users’ residential locations is particularly relevant to COVID-19 vaccination, given that rural and urban residents have shown a large gap in COVID-19 knowledge (Zhang, Zhu, et al., 2021). Research has revealed that COVID-19 was transmitted at a higher rate in densely populated urban areas in the initial stage of the pandemic (Amram et al., 2020), but as the pandemic progressed, rural residents faced greater COVID-19 risks (Paul et al., 2020).

Several factors have exacerbated the risks among residents of rural counties, including limited access to credible and comprehensive news media (termed “news deserts”), lower education, lower income, and poorer health care access than urban and suburban residents (Abernathy, 2018; Henning-Smith, 2020). Particularly in southern states like Alabama, where many rural locations lack adequate health care services, disparities in COVID-19 health literacy are more pronounced (Mueller et al., 2021). A recent statewide survey demonstrated this urban-rural gap: urban residents in Alabama were more likely to understand information about COVID-19 and had less difficulty accessing it than their rural counterparts (Crozier et al., 2021).

Although the rural-urban distinction does not capture all sociodemographic differences, it offers a nuanced perspective on how misinformation interventions may work differently to enhance positive attitudes toward the COVID-19 vaccine depending on individuals’ residential locations. For example, rural residents’ low baseline awareness of the benefits of COVID-19 vaccination may weaken the effects of the misinformation interventions tested in the current study. Given the increasing demand for misinformation intervention strategies tailored to specific populations, examining differences among individuals from different residential locations has practical utility.

Research Design

Research Questions

Our three research questions are:

- Do users who comment on a misinformation post have more favorable attitudes toward COVID-19 vaccinations than users who only read the post and do not comment on it?

- Do users who see an AI fact-check label on a misinformation post have more favorable attitudes toward COVID-19 vaccinations than users who read the same post without the label?

- How do participants’ residential areas (rural vs. suburban vs. urban) moderate the effects of the experimental intervention conditions (comments condition and AI fact-check label condition) on attitudes toward COVID-19 vaccination?

Data, Methods, and Procedures

We conducted a one-way, between-subjects online experiment comparing three conditions: (1) the comments condition, (2) the AI fact-check label condition, and (3) the misinformation exposure only condition. These conditions are defined in Table 1. Participants were randomly assigned to one of the three conditions. This design allowed us to compare attitudes toward COVID-19 vaccination across the experimental conditions.

Table 1. Treatment Conditions

| Treatment Condition | Definition |
|---|---|
| Comments condition | Participants in this condition were required to leave comments on a misinformation post. |
| AI fact-check label condition | Participants in this condition were shown an AI fact-check label attached to a misinformation post. |
| Misinformation exposure condition | Participants in this condition saw only a misinformation post. |

Participants in all conditions were asked to imagine that a series of Facebook posts had been uploaded to one of their personal Facebook community groups. The misinformation post stated, “Did anyone hear that Bill Gates is using the COVID-19 vaccine to plant microchips in people?” It also included a picture of Mr. Gates. A fictitious user’s name was used for the misinformation post to prevent confounding effects related to the source. The experiment also included four filler posts, presented alongside the misinformation post in random order, to enhance the external validity of the study. All participants were given a minimum of 30 seconds to read the Facebook posts before they could move on to the posttest questions.

In the comments condition, participants were required to leave short comments on the misinformation post and were informed that their comments would be shared with other members of the Facebook group. Specifically, we manipulated the misinformation post so that it directly asked participants to share their thoughts in the comments section. Participants in this condition were not exposed to others’ comments, however; they were only required to leave their own thoughts on the misinformation post.

For the AI fact-check label condition, participants were first shown an AI label stating, “Proceed with caution: AI system detects that this web page contains some false claims.” They were then exposed to a series of Facebook posts, including the misinformation post. The fact-check label did not indicate which post was false, which is standard practice for several fact-checking browser extensions. For example, NewsGuard, a tool that rates the credibility of websites, uses the warning label “Proceed with Caution: This website severely violates basic journalistic standards,” which is similar to the warning in our experiment. In addition, our fact-check label explicitly indicated that AI had detected the false claims. Participants in this condition were not allowed to comment.

For the misinformation exposure condition, participants were simply instructed to read the Facebook posts. They received no warning message and were not allowed to comment.
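The three conditions and the feed procedure described above can be summarized as a small configuration. The sketch below is a hypothetical illustration, not the study's survey software: the `Condition` fields, the helper functions, and the use of simple (unblocked) randomization through Python's `random` module are assumptions. Only the three conditions, the four filler posts, their random ordering alongside the misinformation post, and the 30-second minimum come from the text.

```python
import random
from dataclasses import dataclass


# Hypothetical encoding of the three study conditions (field names are illustrative).
@dataclass(frozen=True)
class Condition:
    name: str
    show_ai_label: bool    # page-level AI warning shown before the feed
    require_comment: bool  # participant must comment on the misinformation post
    allow_comment: bool    # commenting is possible at all


CONDITIONS = (
    Condition("comments", show_ai_label=False, require_comment=True, allow_comment=True),
    Condition("ai_label", show_ai_label=True, require_comment=False, allow_comment=False),
    Condition("misinfo_only", show_ai_label=False, require_comment=False, allow_comment=False),
)

MIN_READ_SECONDS = 30  # minimum reading time before the posttest (from the text)
N_FILLER_POSTS = 4     # filler posts shown alongside the misinformation post


def assign_condition(rng: random.Random) -> Condition:
    """Simple (unblocked) random assignment; an assumption, not the study's code."""
    return rng.choice(CONDITIONS)


def build_feed(rng: random.Random, misinfo_post: str, fillers: list) -> list:
    """Return the misinformation post and the filler posts in random order."""
    if len(fillers) != N_FILLER_POSTS:
        raise ValueError(f"expected {N_FILLER_POSTS} filler posts")
    feed = [misinfo_post, *fillers]
    rng.shuffle(feed)
    return feed


def may_continue(seconds_on_page: float) -> bool:
    """Participants may advance only after the minimum reading time."""
    return seconds_on_page >= MIN_READ_SECONDS


if __name__ == "__main__":
    rng = random.Random(2021)
    condition = assign_condition(rng)
    feed = build_feed(rng, "misinformation post", ["f1", "f2", "f3", "f4"])
    print(condition.name, feed, may_continue(31))
```

Simple randomization does not force equal group sizes, which is consistent with (though not evidence for) the unequal condition sizes reported in Table 2.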

Sampling and Participants

Upon agreeing to participate, participants answered a series of screening questions about their residential area in Alabama (rural, urban, or suburban; stratified sampling targeted 50% rural and 25% each urban and suburban participants), their age (18 years or older), and whether they held a Facebook account. Eligible participants then completed a pretest questionnaire measuring preexisting belief in the false claim that Bill Gates plans to use the COVID-19 vaccine to implant microchips in people, general attitudes toward COVID-19 vaccines, COVID-19 vaccine skepticism, and COVID-19 vaccine uptake. Participants were then randomly assigned to one of the three experimental conditions and answered posttest questions about their attitudes toward COVID-19 vaccination. Lastly, participants answered demographic questions covering gender, education, race, and political orientation. At the end of the study, they were debriefed about the study’s purpose.

Participants were recruited between February and March 2021 via Amazon Mechanical Turk (MTurk). MTurk is widely used to recruit diverse participants (Casler et al., 2013) and is known to be comparable to other conventional sampling methods for recruiting rural populations (Saunders et al., 2021). As stated above, all participants were Alabama residents. The final sample comprised 274 participants (N = 132 rural, N = 64 urban, N = 78 suburban).
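As a quick arithmetic check on the stratified quotas, the sketch below applies the 50/25/25 targets to the final sample of 274 and compares them with the achieved group sizes. Treating the quotas as shares of the final sample is an assumption; the text does not describe how the quotas were enforced during recruitment, and the function name is hypothetical.

```python
# Stratified quota targets stated in the text: 50% rural, 25% urban, 25% suburban.
TARGET_SHARES = {"rural": 0.50, "urban": 0.25, "suburban": 0.25}
# Final sample sizes reported in the text.
ACHIEVED = {"rural": 132, "urban": 64, "suburban": 78}


def quota_report(target_shares: dict, achieved: dict) -> tuple:
    """Compare each stratum's achieved size with its quota target."""
    total = sum(achieved.values())
    rows = {}
    for area, share in target_shares.items():
        target_n = share * total  # quota expressed as a count of the final sample
        rows[area] = {
            "target_n": target_n,
            "achieved_n": achieved[area],
            "achieved_share": achieved[area] / total,
            "gap": achieved[area] - target_n,  # negative means under target
        }
    return total, rows


if __name__ == "__main__":
    total, rows = quota_report(TARGET_SHARES, ACHIEVED)
    for area, r in rows.items():
        print(f"{area:9s} target {r['target_n']:6.1f}  achieved {r['achieved_n']:4d} "
              f"({r['achieved_share']:.1%})")
```

With the reported sizes, rural participants make up about 48.2% of the final sample (132 against a target of 137), urban participants about 23.4% (64 against 68.5), and suburban participants about 28.5% (78 against 68.5).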

Data Analysis

A one-way analysis of covariance (ANCOVA) was used to test for differences in attitudes toward COVID-19 vaccination across the experimental conditions, and a two-way ANCOVA was performed to examine the interaction between residential location and experimental condition, with control variables included in both analyses. The control variables were preexisting belief in the misleading statement, general attitudes toward the COVID-19 vaccine, COVID-19 vaccine skepticism, COVID-19 vaccine uptake, and political orientation.
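The one-way ANCOVA can be framed as a comparison of two nested regression models: one with only the covariates, and one that adds dummy codes for the experimental conditions. The dependency-free sketch below illustrates that logic; it is not the authors' analysis code (which is not described), the function names are hypothetical, and it assumes each control variable enters as a single numeric covariate. Under that assumption, with N = 274, three conditions, and five covariates, the error degrees of freedom are 274 - 3 - 5 = 266 and the effect degrees of freedom are 3 - 1 = 2.

```python
from typing import List, Sequence, Tuple


def _ols_sse(X: List[List[float]], y: Sequence[float]) -> float:
    """Residual sum of squares of an OLS fit, solved via the normal equations."""
    p = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting on [X'X | X'y].
    a = [row[:] + [b] for row, b in zip(xtx, xty)]
    for col in range(p):
        piv = max(range(col, p), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        pivot = a[col][col]
        a[col] = [v / pivot for v in a[col]]
        for r in range(p):
            if r != col:
                factor = a[r][col]
                a[r] = [vr - factor * vc for vr, vc in zip(a[r], a[col])]
    beta = [a[i][p] for i in range(p)]
    return sum((yi - sum(b * x for b, x in zip(beta, r))) ** 2 for r, yi in zip(X, y))


def ancova_df(n: int, k: int, c: int) -> Tuple[int, int]:
    """(effect df, error df) for a one-way ANCOVA with k groups and c covariates."""
    return k - 1, n - k - c


def ancova_f(y: Sequence[float], groups: Sequence[str],
             covariates: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """F test for group differences after adjusting for covariates.

    Reduced model: intercept + covariates.
    Full model:    intercept + covariates + (k - 1) group dummy variables.
    """
    levels = sorted(set(groups))
    c = len(covariates[0])
    reduced = [[1.0, *map(float, cov)] for cov in covariates]
    dummies = [[1.0 if g == lvl else 0.0 for lvl in levels[1:]] for g in groups]
    full = [r + d for r, d in zip(reduced, dummies)]
    sse_reduced, sse_full = _ols_sse(reduced, y), _ols_sse(full, y)
    df_effect, df_error = ancova_df(len(y), len(levels), c)
    f = ((sse_reduced - sse_full) / df_effect) / (sse_full / df_error)
    return f, df_effect, df_error
```

With no covariates the function reduces to an ordinary one-way ANOVA, which makes it easy to check by hand; in practice a statistics package would normally be used instead.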

Ethical Considerations

The data were collected after receiving approval from the University of Alabama Institutional Review Board.

Findings

A one-way ANCOVA revealed a significant difference in attitudes toward COVID-19 vaccination among the three experimental groups (F(2, 266) = 3.40, p = .04, ηp² = .03). Post hoc tests using Fisher’s least significant difference (LSD) revealed that participants who left comments on the misinformation post had more positive attitudes toward vaccination (Madjusted = 3.55, SE = .10) than those who were only exposed to the misinformation post (Madjusted = 3.24, SE = .10; p = .03). Participants who saw the AI fact-check label also held significantly more positive attitudes toward vaccination (Madjusted = 3.58, SE = .12) than those who saw only the misinformation post (p = .03). There was no significant difference between the AI fact-check label condition and the comments condition (p = .86), suggesting that both interventions produced similar effects.
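The pairwise comparisons above can be roughly approximated from the reported adjusted means and standard errors alone. The sketch below is not Fisher's LSD as the authors computed it: a proper LSD test uses the model's pooled error term and the covariance between adjusted means, neither of which is reported. Instead it assumes the three estimates are independent, so the standard error of a difference is the square root of the summed squared SEs, and it uses the normal distribution as a stand-in for t with 266 degrees of freedom. The function name is hypothetical.

```python
import math
from itertools import combinations

# Adjusted means and standard errors reported for the one-way ANCOVA.
ADJUSTED = {
    "comments": (3.55, 0.10),
    "ai_label": (3.58, 0.12),
    "misinfo_only": (3.24, 0.10),
}


def approx_pairwise(adjusted: dict) -> dict:
    """Unadjusted pairwise z tests on adjusted means (LSD-style, approximate).

    Assumes independent estimates: SE_diff = sqrt(SE1**2 + SE2**2).
    Uses a normal approximation in place of the t distribution.
    """
    results = {}
    for a, b in combinations(adjusted, 2):
        (m1, se1), (m2, se2) = adjusted[a], adjusted[b]
        z = (m1 - m2) / math.hypot(se1, se2)
        p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
        results[(a, b)] = (z, p_two_sided)
    return results


if __name__ == "__main__":
    for (a, b), (z, p) in approx_pairwise(ADJUSTED).items():
        print(f"{a} vs {b}: z = {z:.2f}, p = {p:.3f}")
```

Under these assumptions, both intervention-versus-exposure contrasts fall below .05 and the comments-versus-AI-label contrast does not, which matches the reported pattern; the approximate p values should not be expected to match the published ones exactly.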

Next, a two-way ANCOVA was performed to assess how place of residence moderated the effect of experimental condition on attitudes toward COVID-19 vaccination. Simple main effects analyses with LSD comparisons showed that rural participants’ attitudes toward COVID-19 vaccination were only modestly affected by the two interventions. Rural participants who left comments on the misinformation post showed more positive attitudes toward vaccination (Madjusted = 3.57, SE = .14) than rural participants who were exposed to the misinformation post only (Madjusted = 3.12, SE = .14; p = .03). However, there was no statistically significant difference between rural participants who saw the AI fact-check label (Madjusted = 3.53, SE = .18) and those who saw only the misinformation post (p = .06). Although both interventions had limited effects on rural residents, the commenting intervention appeared to encourage slightly more positive attitudes toward vaccination.

For suburban participants, commenting had a pronounced effect on positive attitudes toward COVID-19 vaccination. Attitudes in the comments condition (Madjusted = 3.98, SE = .19) were significantly more positive than those in the AI fact-check label condition (Madjusted = 3.38, SE = .19; p = .02) and the misinformation exposure condition (Madjusted = 3.39, SE = .19; p = .01). No significant difference, however, was found between the AI fact-check label condition and the misinformation exposure condition (p = .86).

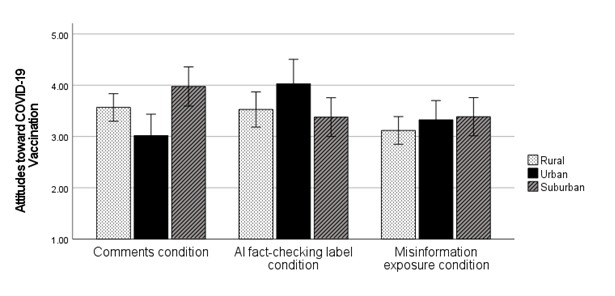

In contrast to rural and suburban participants, urban participants responded most strongly to the AI fact-check label. Urban participants in the AI fact-check label condition showed significantly more positive attitudes toward COVID-19 vaccination (Madjusted = 4.03, SE = .24) than those in the comments condition (Madjusted = 3.02, SE = .21; p = .003) or the misinformation exposure condition (Madjusted = 3.33, SE = .19; p = .03). No significant difference was found between urban participants in the comments condition and those in the misinformation exposure condition (p = .27). Table 2 and Figure 1 present the moderating effects of residential location.

Table 2. Descriptive Statistics for Attitudes toward COVID-19 Vaccination by Experimental Conditions and Residential Areas

| Residential Area | Comments Condition (N = 99), Madjusted (SE) | AI Fact-Checking Label Condition (N = 73), Madjusted (SE) | Misinformation Exposure Condition (N = 102), Madjusted (SE) |
|---|---|---|---|
| Rural (N = 132) | 3.57 (.14)a | 3.53 (.18)b | 3.12 (.14)b |
| Urban (N = 64) | 3.02 (.21)c | 4.03 (.24)d | 3.33 (.19)c |
| Suburban (N = 78) | 3.98 (.19)e | 3.38 (.19)f | 3.39 (.19)f |

Note: Mean values sharing identical subscripts within rows are not significantly different at the p < .05 level. The results controlled for preexisting beliefs in the misleading statement, general attitudes toward COVID-19 vaccine, COVID-19 vaccine skepticism, COVID-19 vaccine uptake, and political orientation.
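The moderation pattern in Table 2 is easier to see as differences from the misinformation exposure baseline within each residential area. The sketch below computes those differences and the highest-scoring condition per area directly from the table's adjusted means; the dictionary keys and function names are hypothetical. It is purely descriptive and says nothing about significance, which comes from the LSD comparisons reported above (for example, the rural AI label difference was not significant, p = .06).

```python
# Adjusted means from Table 2 (rows: residential area; columns: condition).
TABLE2 = {
    "rural":    {"comments": 3.57, "ai_label": 3.53, "misinfo_only": 3.12},
    "urban":    {"comments": 3.02, "ai_label": 4.03, "misinfo_only": 3.33},
    "suburban": {"comments": 3.98, "ai_label": 3.38, "misinfo_only": 3.39},
}


def effects_vs_baseline(table: dict, baseline: str = "misinfo_only") -> dict:
    """Adjusted-mean difference between each intervention and the baseline, per area."""
    return {
        area: {cond: round(mean - row[baseline], 2)
               for cond, mean in row.items() if cond != baseline}
        for area, row in table.items()
    }


def best_condition(table: dict) -> dict:
    """Condition with the highest adjusted mean in each area (ignores significance)."""
    return {area: max(row, key=row.get) for area, row in table.items()}


if __name__ == "__main__":
    print(effects_vs_baseline(TABLE2))
    print(best_condition(TABLE2))
```

For rural participants, both interventions sit roughly 0.41 to 0.45 points above baseline; for urban participants, only the AI label is above baseline (by 0.70) while commenting falls below it; for suburban participants, only commenting is above baseline (by 0.59), with the AI label essentially at baseline.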

Figure 1. Comparison of Adjusted Mean Scores for Experimental Conditions by Residential Areas on Attitudes toward COVID-19 Vaccination

Conclusion

The findings of the current study have several important theoretical and practical implications. First, our study shows that platform-based anti-misinformation interventions, manipulated in terms of user or machine agency, can modestly improve attitudes toward COVID-19 vaccination. Similar interventions may be promising ways to address other types of health misinformation on social media. Our findings also suggest that encouraging users to scrutinize their social media feeds more actively can mitigate the impact of viewing misinformation. Although assessing the cognitive processes that the interventions motivated was beyond the scope of our study, this is a fruitful topic for future research.

More specifically, the results for the commenting condition demonstrate the applicability of the “sender effect” (Pingree, 2007) as a theoretical framework for explaining how users might come to hold more positive attitudes toward vaccination after leaving their thoughts on the misinformation post. Consistent with the sender effect, which holds that expression yields cognitive benefits (Pingree, 2007; Shah, 2016), empirical research has found that expression in the social media environment facilitates cognitive activity (e.g., Oeldorf-Hirsch, 2018) and motivates users to become active participants (Gil de Zúñiga et al., 2014). Taking these findings into account, we suggest that commenting on the misinformation post might encourage users to pause and reconsider misleading content instead of processing the conspiratorial claim heuristically.

We also found that the AI fact-check label was effective at promoting positive attitudes toward vaccination, which aligns with previous research on the effectiveness of fact-check labels (e.g., Lee, 2020; Zhang, Featherstone, et al., 2021). Moreover, explicitly stating that the false post had been detected by AI appeared to have positive effects on participants in this condition. This suggests that users might generally perceive AI as objective, neutral, and accurate, as posited by the machine heuristic (Sundar, 2008). We speculate that the AI fact-check label may prompt users to be skeptical of the posts in their feed: the label may have primed participants to look for falsehoods in the posts they viewed, and their active scrutiny of the misinformation post may have contributed to more positive attitudes toward vaccination.

However, the effectiveness of the misinformation interventions varied by users’ residence (rural vs. suburban vs. urban). Among rural respondents, the interventions did not have strong effects on attitudes toward COVID-19 vaccination. Although leaving comments on the misinformation post resulted in significantly more favorable attitudes toward vaccination than the misinformation exposure condition, there were no significant differences between the other conditions. This suggests that even the commenting intervention may have limited effects among people in rural locations. By contrast, the commenting intervention had a pronounced effect among suburban residents, differing significantly from both the AI fact-check label and the misinformation exposure conditions.

For urban participants, the AI fact-check label emerged as the effective tool for promoting positive attitudes toward vaccination. The limited effects of the AI fact-check label among rural and suburban residents could be attributed to differences between rural, suburban, and urban residents in understanding how AI operates. Additional analyses showed that the urban (M = 3.67, SD = 1.64; p = .003) and suburban (M = 4.12, SD = 1.52; p < .001) samples each reported higher education levels than the rural sample (M = 3.11, SD = 1.33). Relatedly, a lack of media literacy, and more specifically of knowledge about AI, particularly among rural residents might also contribute to the ineffectiveness of misinformation interventions designed for social media platforms. This interpretation supports efforts to give users more detailed explanations of how AI detects misinformation, an approach referred to as explainable AI (Miller et al., 2017; Rai, 2020). It is also consistent with recent research suggesting that the criteria for AI fake news detection should be disclosed transparently to reduce uncertainty and increase trust (Liu, 2021). In addition, we recommend more engaging and interactive interface designs, going beyond one-time commenting, that can elicit significant effects even among rural populations. For example, creating discussion forums on social media where rural residents can share their thoughts and interact with others on a regular basis could help prevent individuals from falling for misinformation.

In sum, our findings suggest that a one-size-fits-all approach to countering misleading or false social media posts may not be suitable, because individual differences shape how users process misinformation and how effective interventions are (Chou & Budenz, 2020). Future research should address how individuals comprehend AI fact-checking interventions and the cognitive effects of commenting on posts. It is also important to examine whether the AI fact-check label encourages users to scrutinize posts to the same degree as leaving comments does, in order to understand how the effects of misinformation interventions vary across individuals.

Future Research Directions

This study has several limitations that could be addressed in future research. First, because Alabama has one of the lowest COVID-19 vaccination rates in the United States, we recruited only Alabama residents from the MTurk pool; as a result, our sample does not represent the general population of the United States. Second, although we controlled for preexisting beliefs in the misleading claim, we did not measure whether participants’ attitudes toward vaccination actually changed from before to after the interventions. Nor do we know whether participants’ postintervention attitudes toward vaccination depended on how much attention they paid to the intervention. Third, the current study was cross-sectional. Because attitudes can be formed and changed by various factors, future research should examine whether one-time interventions like ours have a lasting effect on positive attitudes toward vaccination. Lastly, future research should measure participants’ health and AI literacy and evaluate whether the limited effect of the AI fact-check label among rural and suburban residents is related to a lack of health and AI literacy.

Our findings offer practical implications for social media and AI practitioners seeking to implement best practices for dealing with misinformation on their platforms. In an age of conspiratorial claims that can directly affect individuals’ health decisions, it is imperative to understand that simple messages flagging misinformation as false may not be enough. Practitioners should consider that a simple AI fact-check label, a “black box” over which users have no control, will not produce equal effects for every social media user. Given that the current study is among the first to include residential location in examining the effects of misinformation interventions, future research on misinformation should focus more on individual differences and develop strategies for determining when, how, and to whom various types of social media misinformation interventions should be directed to affect users’ attitudes.

References

Khubchandani, J., Sharma, S., Price, J. H., Wiblishauser, M. J., Sharma, M., & Webb, F. J. (2021). COVID-19 vaccination hesitancy in the United States: A rapid national assessment. Journal of Community Health, 46(2), 270–277. https://doi.org/10.1007/s10900-020-00958-x

Coustasse, A., Kimble, C., & Maxik, K. (2021). COVID-19 and vaccine hesitancy: A challenge the United States must overcome. The Journal of Ambulatory Care Management, 44(1), 71–75. https://doi.org/10.1097/JAC.0000000000000360

Bode, L., & Vraga, E. K. (2015). In related news, that was wrong: The correction of misinformation through related stories functionality in social media. Journal of Communication, 65(4), 619–638. https://doi.org/10.1111/jcom.12166

Vraga, E. K., Bode, L., & Tully, M. (2021). The effects of a news literacy video and real-time corrections to video misinformation related to sunscreen and skin cancer. Health Communication, 1–9. https://doi.org/10.1080/10410236.2021.1910165

Lee, J. (2020). The effect of web add-on correction and narrative correction on belief in misinformation depending on motivations for using social media. Behaviour & Information Technology, 1–15. https://doi.org/10.1080/0144929X.2020.1829708

Pennycook, G., McPhetres, J., Zhang, Y., Lu, J. G., & Rand, D. G. (2020). Fighting COVID-19 misinformation on social media: Experimental evidence for a scalable accuracy-nudge intervention. Psychological Science, 31(7), 770–780. https://doi.org/10.1177/0956797620939054

Oeldorf-Hirsch, A. (2018). The role of engagement in learning from active and incidental news exposure on social media. Mass Communication and Society, 21(2), 225–247. https://doi.org/10.1080/15205436.2017.1384022

Yoo, S. W., Kim, J. W., & Gil de Zúñiga, H. (2017). Cognitive benefits for senders: Antecedents and effects of political expression on social media. Journalism & Mass Communication Quarterly, 94(1), 17–37. https://doi.org/10.1177/1077699016654438

Chaiken, S., Pomerantz, E. M., & Giner-Sorolla, R. (1995). Structural consistency and attitude strength. In R. E. Petty & J. A. Krosnick (Eds.), Attitude strength: Antecedents and consequences (pp. 387–412). Hillsdale, NJ: Erlbaum.

Halpern, D., & Gibbs, J. (2013). Social media as a catalyst for online deliberation? Exploring the affordances of Facebook and YouTube for political expression. Computers in Human Behavior, 29(3), 1159–1168. https://doi.org/10.1016/j.chb.2012.10.008

Gil de Zúñiga, H., Molyneux, L., & Zheng, P. (2014). Social media, political expression, and political participation: Panel analysis of lagged and concurrent relationships. Journal of Communication, 64(4), 612–634. https://doi.org/10.1111/jcom.12103

Zhang, J., Featherstone, J. D., Calabrese, C., & Wojcieszak, M. (2021). Effects of fact-checking social media vaccine misinformation on attitudes toward vaccines. Preventive Medicine, 145, 106408. https://doi.org/10.1016/j.ypmed.2020.106408

Sundar, S. S. (2008). The MAIN model: A heuristic approach to understanding technology effects on credibility. In M. J. Metzger & A. J. Flanagin (Eds.), Digital media, youth, and credibility (pp. 72–100). Cambridge, MA: The MIT Press.

Dawes, R. M., Faust, D., & Meehl, P. E. (1989). Clinical versus actuarial judgment. Science, 243(4899), 1668–1674. https://doi.org/10.1126/science.2648573

Zhang, J., Zhu, L., Li, S., Huang, J., Ye, Z., Wei, Q., & Du, C. (2021). Rural–urban disparities in knowledge, behaviors, and mental health during COVID-19 pandemic: A community-based cross-sectional survey. Medicine, 100(13). https://doi.org/10.1097/MD.0000000000025207

Amram, O., Amiri, S., Lutz, R. B., Rajan, B., & Monsivais, P. (2020). Development of a vulnerability index for diagnosis with the novel coronavirus, COVID-19, in Washington State, USA. Health & Place, 64, 102377. https://doi.org/10.1016/j.healthplace.2020.102377

Paul, R., Arif, A. A., Adeyemi, O., Ghosh, S., & Han, D. (2020). Progression of COVID‐19 from urban to rural areas in the United States: A spatiotemporal analysis of prevalence rates. The Journal of Rural Health, 36(4), 591–601. https://doi.org/10.1111/jrh.12486

Abernathy, P. M. (2018). The expanding news desert. Center for Innovation and Sustainability in Local Media, School of Media and Journalism. Chapel Hill, NC: UNC Press. https://www.cislm.org/wp-content/uploads/2018/10/The-Expanding-News-Desert-10_14-Web.pdf

Henning-Smith, C. (2020). The unique impact of COVID-19 on older adults in rural areas. Journal of Aging & Social Policy, 32(4–5), 396–402. https://doi.org/10.1080/08959420.2020.1770036

Mueller, J. T., McConnell, K., Burow, P. B., Pofahl, K., Merdjanoff, A. A., & Farrell, J. (2021). Impacts of the COVID-19 pandemic on rural America. Proceedings of the National Academy of Sciences, 118(1), 2019378118. https://doi.org/10.1073/pnas.2019378118

Crozier, J., Christensen, N., Li, P., Stanley, G., Clark, D. S., & Selleck, C. (2021). Rural, underserved, and minority populations’ perceptions of COVID-19 information, testing, and vaccination: Report from a southern state. Population Health Management. https://doi.org/10.1089/pop.2021.0216

Casler, K., Bickel, L., & Hackett, E. (2013). Separate but equal? A comparison of participants and data gathered via Amazon’s MTurk, social media, and face-to-face behavioral testing. Computers in Human Behavior, 29(6), 2156–2160. https://doi.org/10.1016/j.chb.2013.05.009

Saunders, E. C., Budney, A. J., Cavazos-Rehg, P., Scherer, E., & Marsch, L. A. (2021). Comparing the feasibility of four web-based recruitment strategies to evaluate the treatment preferences of rural and urban adults who misuse non-prescribed opioids. Preventive Medicine, 152, 106783. https://doi.org/10.1016/j.ypmed.2021.106783

Miller, T., Howe, P., & Sonenberg, L. (2017). Explainable AI: Beware of inmates running the asylum. IJCAI 2017 Workshop on Explainable Artificial Intelligence (XAI). https://arxiv.org/abs/1712.00547

Rai, A. (2020). Explainable AI: From black box to glass box. Journal of the Academy of Marketing Science, 48(1), 137–141. https://doi.org/10.1007/s11747-019-00710-5

Liu, B. (2021). In AI we trust? Effects of agency locus and transparency on uncertainty reduction in human–AI interaction. Journal of Computer-Mediated Communication, 26(6), 384–402. https://doi.org/10.1093/jcmc/zmab013

Chou, W. Y. S., & Budenz, A. (2020). Considering emotion in COVID-19 vaccine communication: Addressing vaccine hesitancy and fostering vaccine confidence. Health Communication, 35(14), 1718–1722. https://doi.org/10.1080/10410236.2020.1838096

Lee, J. & Bissell, K. (2022). Assessing COVID-19 Vaccine Misinformation Interventions Among Rural, Suburban, and Urban Residents (Natural Hazards Center Quick Response Research Report Series, Report 342). Natural Hazards Center, University of Colorado Boulder. https://hazards.colorado.edu/quick-response-report/assessing-covid-19-vaccine-misinformation-interventions-among-rural-suburban-and-urban-residents